ExtraMile by YourTechDiet has brought you another insightful Q&A session highlighting the prevalent technologies and trends that have redefined business operations. For today’s session, we are delighted to feature Francesco Tisiot, the Field CTO and Head of DevEx of Aiven, an AI-ready open-source data platform.

Aiven is a leader in managed data services that aims to help businesses get more value from their data. Francesco has been an integral member of the company's tech leadership team for more than four years. In this discussion, we will cover everything from Francesco's journey in tech to the future of managed data services and the role of AI in data management.

Our guest, Francesco, has extensive experience working with open-source data platforms like Apache Kafka, PostgreSQL, and Apache Flink. Notably, Aiven is a leading provider of Apache Kafka and PostgreSQL. Alongside exploring insights on these platforms, we'll also try to understand the emergence of the Bring Your Own Cloud (BYOC) model.

Welcome, Francesco; we’re super excited to have you with us today!

1. You have been a tech leader for a while now. What excites you about technology and inspired you earlier to establish a career in this field?

Francesco. My journey toward a career in technology was sparked by the early challenges I faced as a student. I struggled academically until middle school, to the point where it was suggested that I attend a vocational school. There, I discovered computer science. I was still the same student, but for some reason, everything suddenly fell into place. I understood mathematics and physics immediately. It simply resonated with me. After high school, I earned a degree in Computer Science Engineering at university and soon began my career in the technology industry.

The opportunity to bridge humanity and technology fascinates and drives me. One without the other seems incomplete. For the most part, my career has been about exploring the relationship between humans and technology. I have been a business intelligence consultant, where you develop technology to meet the needs of the stakeholders; a Developer Advocate, where I bridged the gap between developers and technology; a Field CTO, where achieving the customer's KPIs matters more than any particular feature of the technology; and now Head of DevEx, where we leverage technology to create solutions that help people bridge the gap between their intent and what the software can do to achieve the desired business outcome.

2. Congratulations on Aiven’s achievement of the title, “2025 Google Cloud Partner of the Year in Databases – Data Management”. How do such acknowledgments help the company to perform better and more effectively?

Francesco. Our relationship with Google is not unlike a symbiotic relationship found in biology, where both organisms support each other’s evolution. To understand this, you must recognize that data in any company is not a fixed point in time; it is always in a state of flux. For example, you collect data at the point of sale, when you first hire an employee, or facilitate a financial transaction. You take the initial data from the environment and manipulate it as it flows to create analytics. To be successful, you must understand and fulfill the entire data journey, examining each step to ensure the next one is correct, and do so in near real-time.

For customers, some data on this journey can be processed and manipulated on the Aiven platform, and other data can be processed and manipulated on Google, depending on the task at hand. Aiven processes data in ways Google cannot and vice versa. Still, the real power of our relationship lies in the fact that customers can port their data to other clouds from Google, effectively connecting clouds in an agnostic manner.

From a technological perspective, it has helped us because we have built a stronger relationship with Google through a new product, Aiven for AlloyDB Omni, which we sell in conjunction with Google. This provides Google and Aiven customers with an enterprise-grade, PostgreSQL-compatible solution fully managed by Aiven and optimized for AI-powered search applications. Customers can build and deploy on Google Cloud Platform (GCP) and then port a suite of solutions to other clouds to extend their capabilities to data residing there. This is a unique solution that delivers high performance, reliability, and flexibility, with deployment time under 15 minutes. GCP customers can now easily and efficiently leverage intelligent search in any cloud environment to achieve business objectives. Again, it is a solution that connects technology with humans to create a positive outcome.

3. What is the significance of data in today's business environment? How does data help companies make informed decisions?

Francesco. The American engineer W. Edwards Deming said that without data, you're just another person with an opinion. Therefore, we must again understand the entire data journey to create a solution that effectively addresses the problem. At Aiven, we examine the past and current data available to inform the development of a product or feature, as well as the analytics, so we can quickly move toward a measurable benefit and then fine-tune it for even better results.

Today, every company should be focused on mapping out this entire data journey from end to end. Data is constantly in motion and changing. When the journey is mapped out, you can control your data and respond appropriately, or build an agentic AI solution that chains several complex tasks together. This is critical in today's world, where the scale of change initiated by humans is massive. For example, a single mention from a celebrity influencer can trigger a significant shift in purchase habits in seconds.

Companies need to respond accurately to this phenomenon, create and analyze the data, and build pipelines that move the data from an operational data set to an analytical data set, all in near real-time, for a satisfactory outcome.

Let’s say that 10 years ago, you were running a shop selling shoes. You were okay with checking the inventory at the end of the week and reviewing what was left based on sales.

You could rely on yesterday’s data and not today’s. However, with ever-changing customer behaviors, you no longer have the luxury of relying solely on yesterday’s data; you need to make decisions based on data from just two hours or even minutes ago. We need to be on top of the data, changes, and patterns as soon as they occur.
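To make the shoe-shop example concrete, here is a minimal Python sketch of answering "what sold in the last two hours?" instead of waiting for an end-of-week count. It assumes sales events arrive in time order; the class name `RecentSales` and the SKU strings are hypothetical, and a production pipeline would typically do this windowing in a stream processor rather than in application memory.

```python
from collections import deque
from datetime import datetime, timedelta


class RecentSales:
    """Hypothetical helper: keeps only sales from the last `window` of time."""

    def __init__(self, window: timedelta) -> None:
        self.window = window
        self.events: deque = deque()  # (timestamp, sku, qty), oldest first

    def record(self, ts: datetime, sku: str, qty: int) -> None:
        # Assumes events are recorded in timestamp order.
        self.events.append((ts, sku, qty))

    def totals(self, now: datetime) -> dict:
        # Evict events that have fallen out of the window, then sum per SKU.
        while self.events and self.events[0][0] < now - self.window:
            self.events.popleft()
        out: dict = {}
        for _, sku, qty in self.events:
            out[sku] = out.get(sku, 0) + qty
        return out


if __name__ == "__main__":
    now = datetime(2025, 1, 1, 12, 0)
    sales = RecentSales(timedelta(hours=2))
    sales.record(now - timedelta(hours=5), "sneaker-42", 3)      # too old, evicted
    sales.record(now - timedelta(minutes=30), "sneaker-42", 7)
    sales.record(now - timedelta(minutes=10), "boot-40", 1)
    print(sales.totals(now))  # → {'sneaker-42': 7, 'boot-40': 1}
```

Shrinking the window from a week to two hours (or minutes) is just a different `timedelta`; what changes is how fresh the data has to be when it arrives.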

4. How does AI help optimize and manage large databases? What are the associated challenges of AI integration for data optimization?

Francesco. So, if we consider the traditional database approach, it involves waking up every morning and trying to discover what is happening in the databases. What AI can do, and is already doing well, is summarize everything that has been working or not working over the last day.

It can pinpoint flows, note usage peaks, and help you quickly understand, in context, what is happening and what has happened. The AI also suggests the next steps: it might recommend creating an index here, rewriting your SQL, or removing some indexes there. AI allows for faster, more efficient, and more meaningful management of your database, and hence, your data.

There is, I believe, a trade-off between how much we want AI to do by itself and how much control we want to keep over our infrastructure and databases. The trade-off is often between performance stability and continuous optimization. Suppose you ask the person responsible for a production database whether they would prefer stable but non-optimal performance, or optimal performance at nearly every point in time, with the risk that it becomes non-optimal for 10, 30, or 50 seconds when the AI gets a change wrong.

I think 90% of database operators will tell you that optimal performance is way better than non-optimal, stable performance. This is where we can empower AI to make the changes and continually optimize the database, and we need to offer that as an option.

More tech-savvy companies should be able to decide when to implement the changes AI suggests. On the other hand, companies new to the game may be willing to accept a little more risk to achieve better performance; for them, we should simply allow the suggestions to be applied automatically to the production environment. I believe AI can accomplish a great deal; what needs to be defined is the level of trust and autonomy we give it. A slider that allows for more or less independence, depending on the user, is a good way to understand this concept.
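The "slider" idea can be sketched as a simple policy gate. This is an illustrative Python sketch, not an Aiven feature or API: the `Autonomy` levels, the `Suggestion` type, and the "low"/"high" risk labels are all hypothetical assumptions about how AI-generated database changes might be classified before deciding whether to apply them without a human in the loop.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical positions of the autonomy slider."""
    SUGGEST_ONLY = 0   # AI proposes; a human applies every change
    AUTO_LOW_RISK = 1  # AI applies low-risk changes on its own
    AUTO_ALL = 2       # AI applies every change it proposes


@dataclass
class Suggestion:
    description: str
    risk: str  # "low" or "high" (hypothetical classification)


def should_auto_apply(s: Suggestion, level: Autonomy) -> bool:
    """Return True if the suggestion may go to production without review."""
    if level == Autonomy.AUTO_ALL:
        return True
    if level == Autonomy.AUTO_LOW_RISK:
        return s.risk == "low"
    return False  # SUGGEST_ONLY: everything waits for a human


if __name__ == "__main__":
    add_idx = Suggestion("CREATE INDEX ON orders (customer_id)", "low")
    drop_idx = Suggestion("DROP INDEX orders_legacy_idx", "high")
    for s in (add_idx, drop_idx):
        print(s.description, should_auto_apply(s, Autonomy.AUTO_LOW_RISK))
```

A cautious team would sit at `SUGGEST_ONLY`, while a team willing to trade brief regressions for continuous optimization would move toward `AUTO_ALL`.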

5. PostgreSQL is an advanced open-source database management system. How does Aiven manage PostgreSQL databases while maintaining high performance?

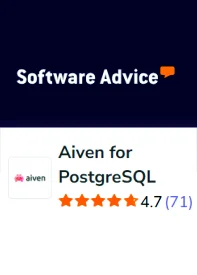

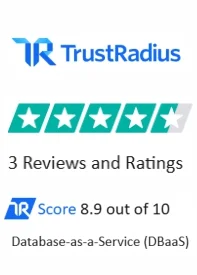

Francesco. Aiven's founders were seasoned PostgreSQL consultants who had grown tired of repeatedly solving the same problems. So, they decided to create a PostgreSQL database platform, and PostgreSQL (PG) is now a popular database among developers, adopted swiftly by both large and small enterprises. Most production databases require monitoring and maintenance plans to manage growth effectively. PostgreSQL databases, however, can grow faster than the actual data suggests, so data volume, MVCC overhead, indexes, storage, the write-ahead log (WAL), and more all need to be managed carefully.
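To illustrate why MVCC overhead can make a PostgreSQL table occupy more space than its live data, here is a toy Python model; it is not PostgreSQL's storage engine. It assumes the simplified rule that every UPDATE appends a new row version and leaves the old one behind as a dead tuple until VACUUM runs. Real VACUUM marks that space as reusable rather than necessarily shrinking files, whereas this sketch simply drops dead versions. `ToyMvccTable` and its methods are hypothetical names.

```python
class ToyMvccTable:
    """Toy MVCC model: UPDATE writes a new row version; the old one stays as a
    dead tuple until vacuum() runs. Not how PostgreSQL lays out pages."""

    def __init__(self) -> None:
        self.versions: list = []  # every stored row version, live or dead
        self.live: dict = {}      # row id -> index of its current version

    def insert(self, row_id: int, value: str) -> None:
        self.versions.append({"id": row_id, "value": value, "dead": False})
        self.live[row_id] = len(self.versions) - 1

    def update(self, row_id: int, value: str) -> None:
        self.versions[self.live[row_id]]["dead"] = True  # old version becomes dead
        self.insert(row_id, value)                       # new version is appended

    def vacuum(self) -> None:
        # Discard dead versions and rebuild the id -> position map.
        self.versions = [v for v in self.versions if not v["dead"]]
        self.live = {v["id"]: i for i, v in enumerate(self.versions)}

    def stored(self) -> int:
        return len(self.versions)


if __name__ == "__main__":
    t = ToyMvccTable()
    t.insert(1, "v0")
    for i in range(1, 100):
        t.update(1, f"v{i}")
    print(t.stored(), len(t.live))  # → 100 1  (one live row, 100 stored versions)
    t.vacuum()
    print(t.stored())               # → 1
```

One frequently updated row ends up occupying a hundred versions' worth of space, which is why vacuum behavior has to be monitored alongside the data itself.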

Aiven has extensive experience and in-house PG experts who have built a highly stable, reliable, and scalable cloud-agnostic data platform. Database managers sleep better knowing we won't crash and that they won't have to replatform (an absolute nightmare) because they hit data ceiling limits. And we manage it for you. If you manage your own database, you are on duty 24/7. We have automations in place so that, if we detect that a node is not functioning properly, we initiate its replacement without disrupting production.

And Aiven runs on Aiven. We can trust Postgres because our company and our livelihood depend on it. We ensure that everything we deliver is of the highest quality, every time, because we are experts in Postgres and have implemented a system of checks and balances to maintain consistent quality. The same applies to Kafka and the other tools and solutions we offer our clients. We are the experts by default.

6. Aiven is a leading provider of Apache Kafka. What are the major features and benefits of this service?

Francesco. Apache Kafka is an event streaming platform, used by enterprises of all sizes, that glues things together in real time. Kafka is used wherever you need to integrate microservices. For example, one microservice produces an order, and another validates the order against the inventory. In the past, we just connected them directly. But if one was down, you could not fulfil the order. You could not close it, you could not reply, and you could not even acknowledge it.

But with Apache Kafka, these microservices communicate through messages. The order is published to Kafka, and when the microservice that checks the inventory wakes up, it picks up the request, processes it, and sends the response back to Kafka in real time. It creates a seamless messaging system between microservices that scales in real time. We are a leading service provider for Apache Kafka, and very few companies are in that position. Kafka has become the default for streaming: practically every streaming use case uses Apache Kafka, and it has won the streaming battle.

Another key feature is that it is highly scalable and technology-agnostic. You can send anything you want through Kafka, and it will be delivered to the other side. It features a component called Kafka Connect, which enables you to connect Kafka to other systems. For instance, if you have a database, you can attach Kafka Connect to it and stream the data into Kafka. Conversely, if you have a service that sends email alerts or Slack messages, you can feed it from Kafka as well. The connectivity piece therefore resolves many of the integration issues between components, and it scales in proportion to Kafka's own scalability.
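The order/inventory decoupling described above can be sketched with a toy in-memory log. This is a conceptual Python model only, not Kafka's client API or broker: `ToyLog`, `inventory_service`, the topic names, and the consumer group names are hypothetical. It mirrors two real Kafka ideas, append-only topics and per-consumer-group offsets, so a producer can keep writing while a consumer is offline, and the consumer catches up from where it left off.

```python
from collections import defaultdict


class ToyLog:
    """Toy stand-in for a Kafka-style broker: append-only topics, and each
    consumer group tracks its own read offset per topic."""

    def __init__(self) -> None:
        self.topics: dict = defaultdict(list)   # topic -> list of messages
        self.offsets: dict = defaultdict(int)   # (group, topic) -> next index

    def produce(self, topic: str, message: dict) -> None:
        # Producers only append; they never wait for a consumer to be online.
        self.topics[topic].append(message)

    def poll(self, group: str, topic: str) -> list:
        # Return everything this group has not read yet, then advance its offset.
        start = self.offsets[(group, topic)]
        batch = self.topics[topic][start:]
        self.offsets[(group, topic)] = start + len(batch)
        return batch


def inventory_service(log: ToyLog, stock: dict) -> None:
    """Hypothetical inventory service: validates pending orders and replies."""
    for order in log.poll("inventory", "orders"):
        ok = stock.get(order["sku"], 0) >= order["qty"]
        if ok:
            stock[order["sku"]] -= order["qty"]
        log.produce("order-replies", {"order_id": order["id"], "accepted": ok})


if __name__ == "__main__":
    log = ToyLog()
    # The order service keeps producing while the inventory service is down.
    log.produce("orders", {"id": 1, "sku": "sneaker-42", "qty": 2})
    log.produce("orders", {"id": 2, "sku": "boot-40", "qty": 5})
    stock = {"sneaker-42": 3, "boot-40": 1}
    inventory_service(log, stock)  # wakes up later and catches up
    print(log.poll("orders-api", "order-replies"))
    # → [{'order_id': 1, 'accepted': True}, {'order_id': 2, 'accepted': False}]
```

Neither service calls the other directly; each only reads from or writes to the log, which is what lets either side be offline or scaled independently. A real deployment would use a Kafka client library and a running cluster instead of this in-memory class.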

However, as with any tool, there can be limitations that require attention when you move from initial adoption to scaling with massive data volumes. A couple of weeks ago, we released a Kafka Improvement Proposal (KIP) as an open-source project. It's a way to detach Kafka from its storage and move it to the cloud, and it's gaining more and more attention in the open-source world.

7. What is your take on the enhanced adoption of the Bring Your Own Cloud (BYOC) model? How can such deployment models be advantageous for businesses? It would be more enriching for our audience if you could share any case studies in this regard.

Francesco. The Bring Your Own Cloud model makes a lot of sense, as it increases the opportunity to use your data securely for specific use cases in environments beyond your commitment to your cloud vendor. Say you are a race car driver or team and you drive a Porsche, but a particular race requires a McLaren to meet the demands of that specific track. You get permission to drive the McLaren for that race, and you win. The BYOC model allows enterprises to do just that in cloud environments. It is secure and reduces costs for specific use cases while extending the possibilities for success. It also plays to Aiven's cloud-agnostic approach, which creates greater efficiencies.

8. What is the future of open-source managed data services? What trends and difficulties could impact this industry in the upcoming years?

Francesco. Firstly, open source has clearly won the battle for data. A traditional database is like a black box: it allows you to analyze and compute data, but it's not easy to extract the data because it's stored in a specific format. Apache Iceberg, on the other hand, is an open-source table format for massive analytic datasets. It was initially developed at Netflix and later donated to the Apache Software Foundation. So anyone who can read an Iceberg table can read my data, and vice versa. People do not want to be restricted to a specific technology, so open source has emerged as the preferred choice. People want to be able to choose the particular technology that best suits their needs and still have a baseline of data available across all technologies, right? For the future of managed services, this means that data formats will remain open and managed data services will be a layer on top of them: the data format stays the same, and what changes is the developer experience.

Secondly, there is a growing use of AI to manage large, increasingly complex datasets and workloads, which seem to grow faster each day. Data begets data, and providing managed services that keep up with this growth reliably and accurately will require more sophisticated AI and agentic AI models trained to meet this challenge without a significant impact on production.

So the future of managed services lies in further developing open-source platforms with carefully constructed and managed AI applications to meet the massive workloads of today and tomorrow.
